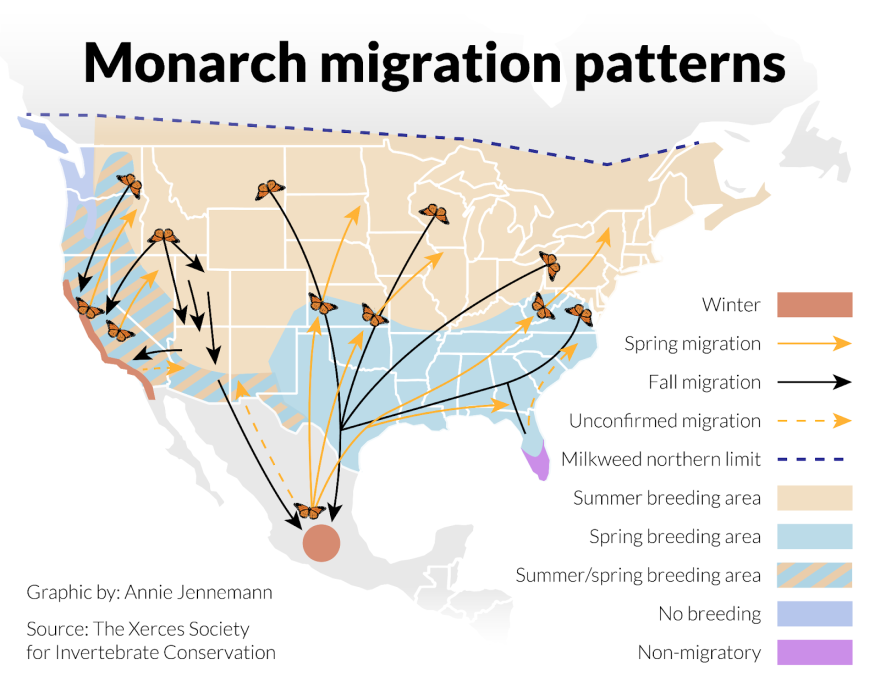

The Monarch butterfly, native to North America, is renowned for its long-distance migration. Each year, millions of Monarchs travel from North America to Mexico, covering up to 4000 km. Flapping-wing aerial vehicles can offer significant advantages in energy efficiency and agility over conventional fixed-wing or rotary-wing designs, whose lift-to-drag ratio deteriorates rapidly as their size is reduced.

Consequently, the flapping wing mechanism has been envisioned as a critical component for micro autonomous drones of the next generation.

While numerous bioinspired robots have been developed, control systems for flapping-wing unmanned aerial vehicles (FWUAVs) lag behind those for more conventional unmanned aerial vehicles such as quadrotors. This gap stems from the difficulty of modeling and controlling flapping-wing dynamics. Most existing models focus on high-frequency flapping of small wings and neglect the coupling between wing motion and body dynamics, which is a key feature of butterfly flight.

Geometric dynamic model and control

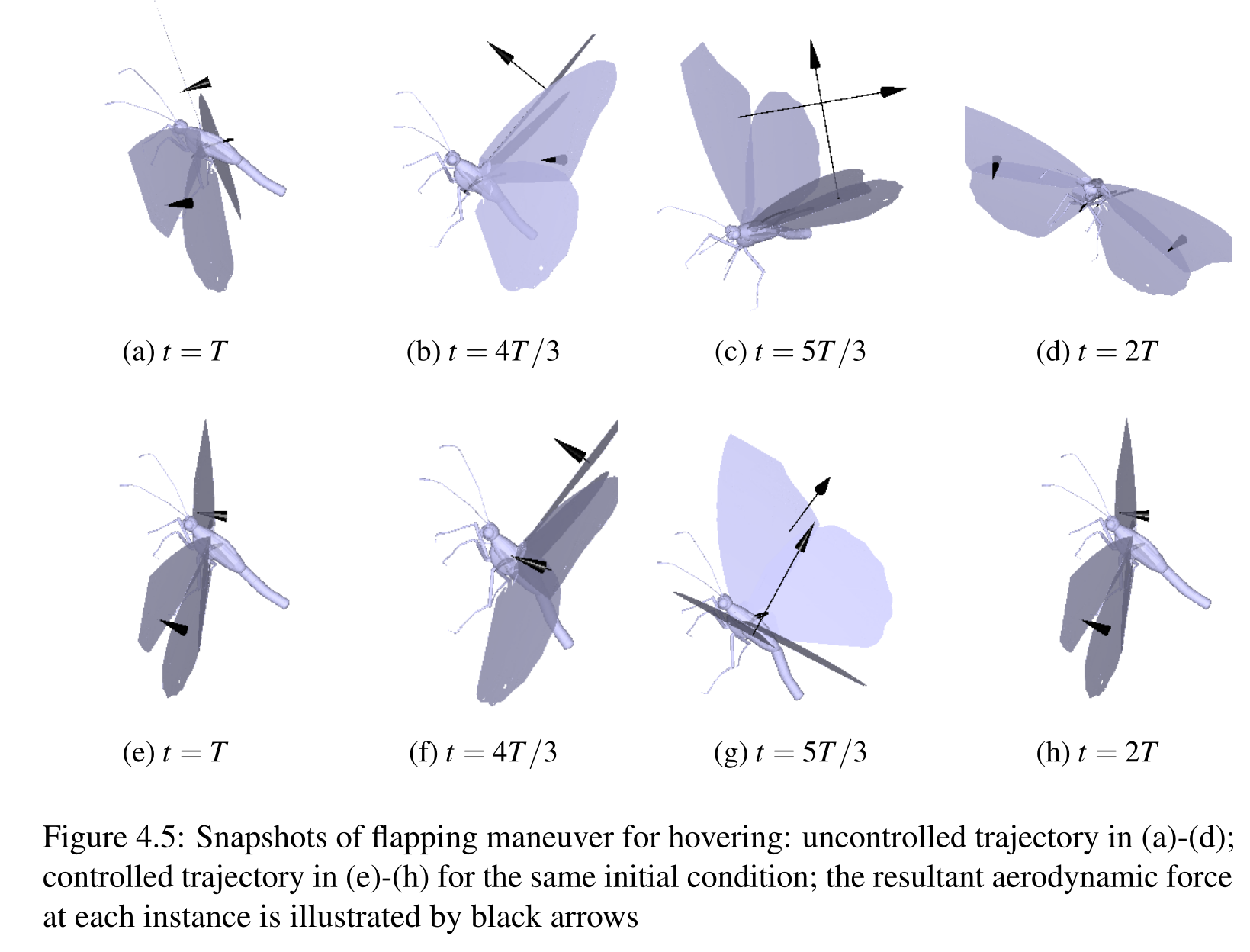

Inspired by the Monarch butterfly’s flight, a novel dynamic model has been developed to account for the effects of low-frequency wing flapping and abdomen undulation. The geometric formulation describes the dynamics globally, avoiding the complexities and singularities associated with local coordinates. Next, an optimal periodic motion that minimizes the energy variations was constructed, and a feedback control system was proposed to asymptotically stabilize it according to Floquet stability theory 1.
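To make the role of Floquet theory concrete, here is a minimal sketch of how stability of a periodic linear system can be checked numerically. It integrates the state-transition matrix over one period to obtain the monodromy matrix and inspects its eigenvalues (the Floquet multipliers). The system `A_toy` (a damped Mathieu equation) and the parameters `a`, `b`, `c` are hypothetical stand-ins chosen for illustration. They are not the linearized FWUAV dynamics from the work described above.

```python
import numpy as np


def monodromy_matrix(A, period, n_steps=4000):
    """Integrate Phi' = A(t) Phi from Phi(0) = I over one period with classical RK4."""
    n = A(0.0).shape[0]
    Phi = np.eye(n)
    h = period / n_steps
    for k in range(n_steps):
        t = k * h
        k1 = A(t) @ Phi
        k2 = A(t + h / 2) @ (Phi + h / 2 * k1)
        k3 = A(t + h / 2) @ (Phi + h / 2 * k2)
        k4 = A(t + h) @ (Phi + h * k3)
        Phi = Phi + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return Phi


def floquet_multipliers(A, period, n_steps=4000):
    """Eigenvalues of the monodromy matrix of x' = A(t) x with A(t + period) = A(t)."""
    return np.linalg.eigvals(monodromy_matrix(A, period, n_steps))


# Hypothetical toy system: x'' + c x' + (a + b cos t) x = 0, written in first-order form.
a, b, c = 2.0, 0.1, 0.1
T = 2 * np.pi  # period of the coefficient matrix


def A_toy(t):
    return np.array([[0.0, 1.0],
                     [-(a + b * np.cos(t)), -c]])


multipliers = floquet_multipliers(A_toy, T)
# The periodic system is asymptotically stable if every multiplier lies strictly inside the unit circle.
is_stable = bool(np.all(np.abs(multipliers) < 1.0))
print("Floquet multiplier magnitudes:", np.abs(multipliers))
print("asymptotically stable:", is_stable)
```

For a nonlinear vehicle model, `A(t)` would be the closed-loop dynamics linearized about the periodic orbit. The stability test on the multipliers is the same.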

Constrained imitation learning from optimal trajectories

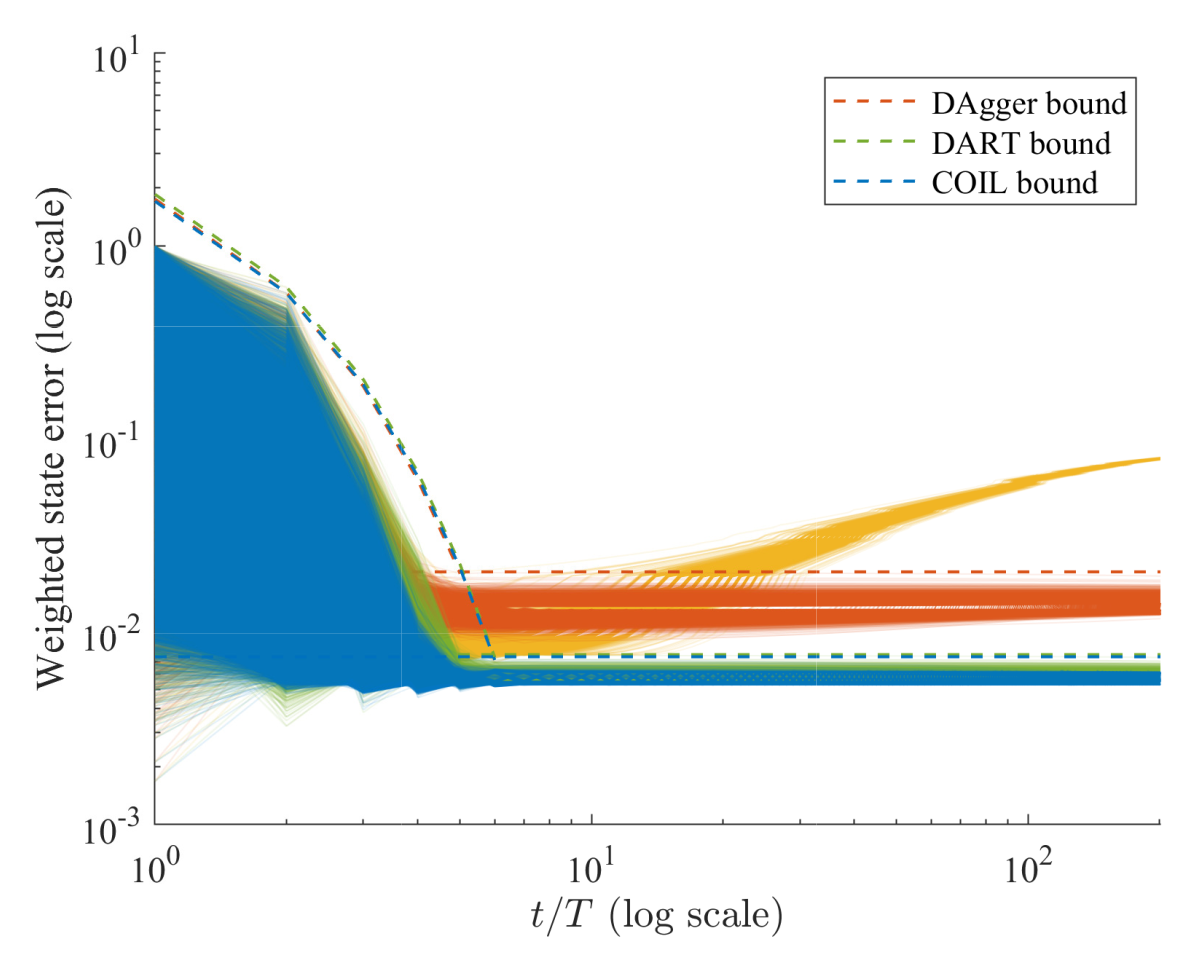

For the full motion of the FWUAV, which includes both translation and rotation of the body (6DOF), a periodic optimal controller was designed after identifying specific wing kinematics parameters and their physical relationship to the aerodynamics. To further improve computational efficiency, imitation learning was tailored to transform a set of optimal trajectories into a data-driven feedback controller. Compared with conventional methods, constrained imitation learning (COIL) eliminates the need to generate additional optimal trajectories online, while simultaneously improving stability properties 2.
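The idea of turning a set of optimal trajectories into a feedback law can be illustrated with plain behavior cloning on a toy problem. The sketch below is not COIL: it omits the constraints entirely and fits an unconstrained linear policy by least squares. The double-integrator model (`A`, `B`), the expert gain `K_expert`, and all other names are assumptions made for this example. A simple linear feedback stands in for the optimal controller.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical discrete-time double integrator (not the FWUAV model).
dt = 0.1
A = np.array([[1.0, dt],
              [0.0, 1.0]])
B = np.array([[0.0],
              [dt]])
K_expert = np.array([[1.0, 1.5]])  # stand-in for the optimal controller


def rollout(x0, K, n_steps=50):
    """Simulate x_{k+1} = A x_k + B u_k with u_k = -K x_k and record (state, control) pairs."""
    xs, us = [], []
    x = x0
    for _ in range(n_steps):
        u = -K @ x
        xs.append(x)
        us.append(u)
        x = A @ x + B @ u
    return np.array(xs), np.array(us)


# Offline data set: expert trajectories from random initial states.
X_list, U_list = [], []
for _ in range(20):
    xs, us = rollout(rng.normal(size=2), K_expert)
    X_list.append(xs)
    U_list.append(us)
X = np.vstack(X_list)  # shape (N, 2)
U = np.vstack(U_list)  # shape (N, 1)

# Behavior cloning with a linear policy u = -K x: solve X @ W = U in the least-squares sense.
W, *_ = np.linalg.lstsq(X, U, rcond=None)
K_learned = -W.T

# Check the closed loop under the learned policy after the fact.
spectral_radius = max(abs(np.linalg.eigvals(A - B @ K_learned)))
print("learned gain:", K_learned)
print("closed-loop spectral radius:", spectral_radius)
```

Once fitted, the policy is evaluated with a single matrix product, so no trajectory optimization is needed at run time. In this sketch stability is only checked afterwards. A constrained formulation would instead build such requirements into the learning problem.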

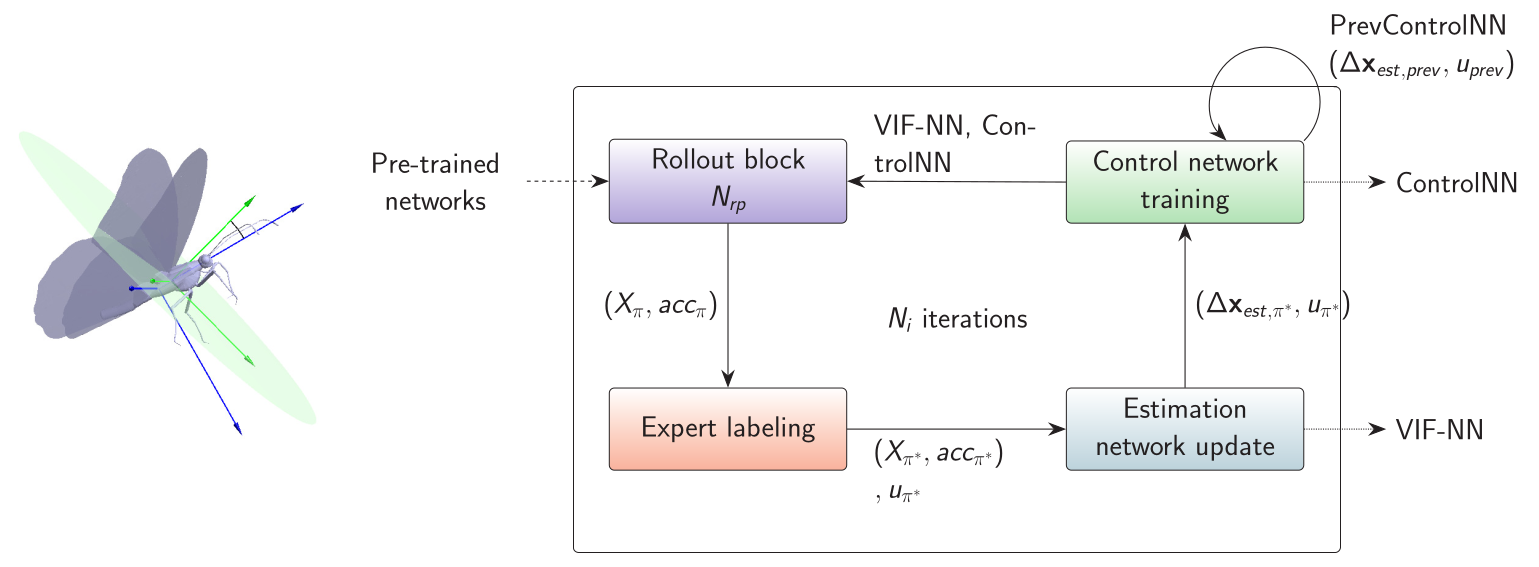

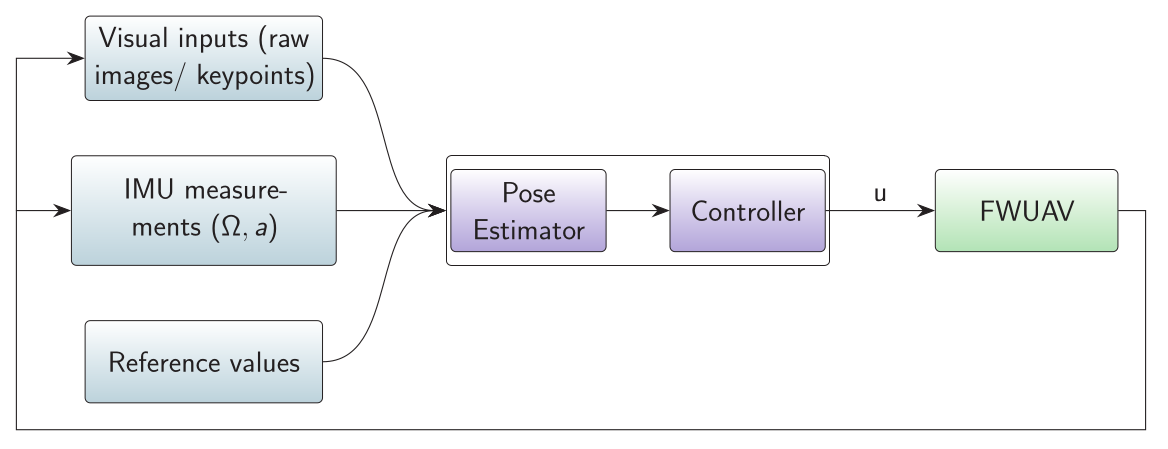

Modular control scheme using visual-inertial data

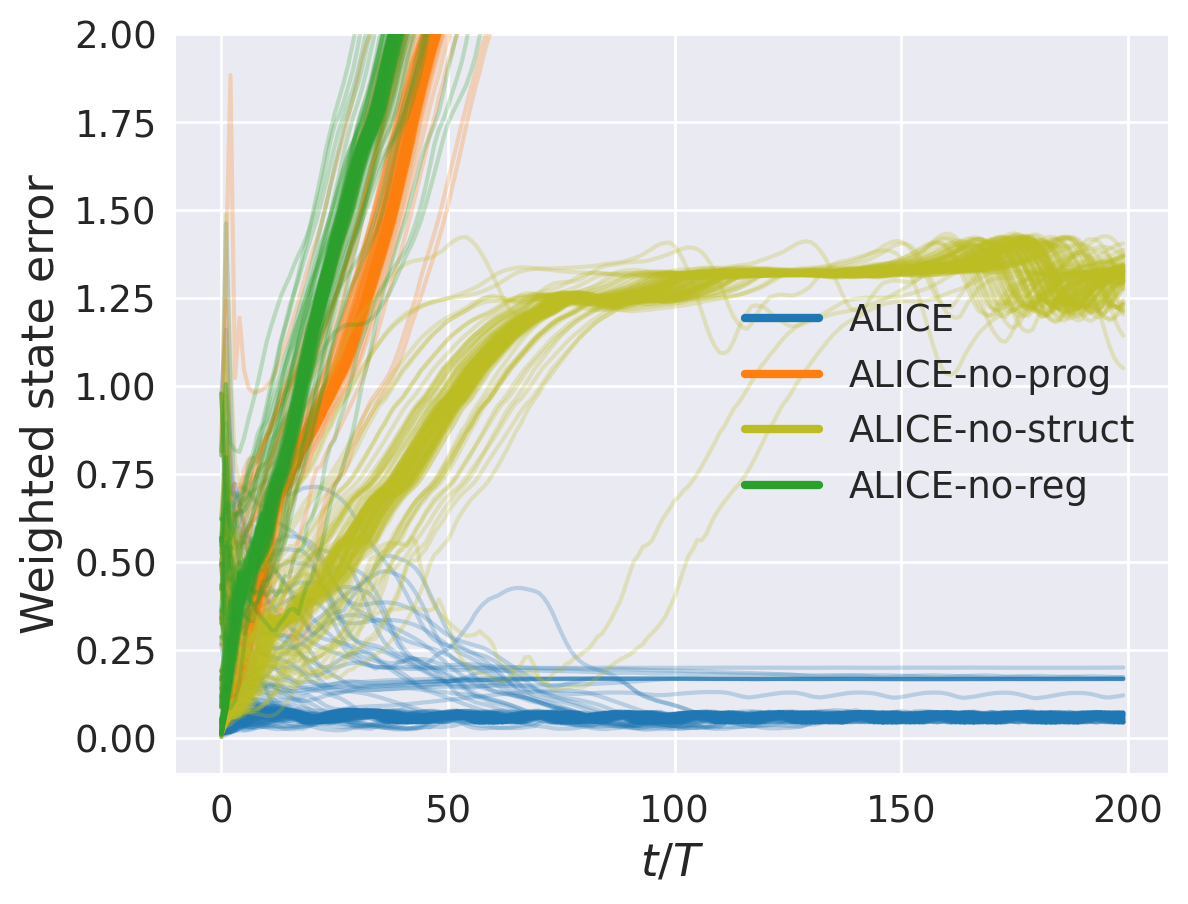

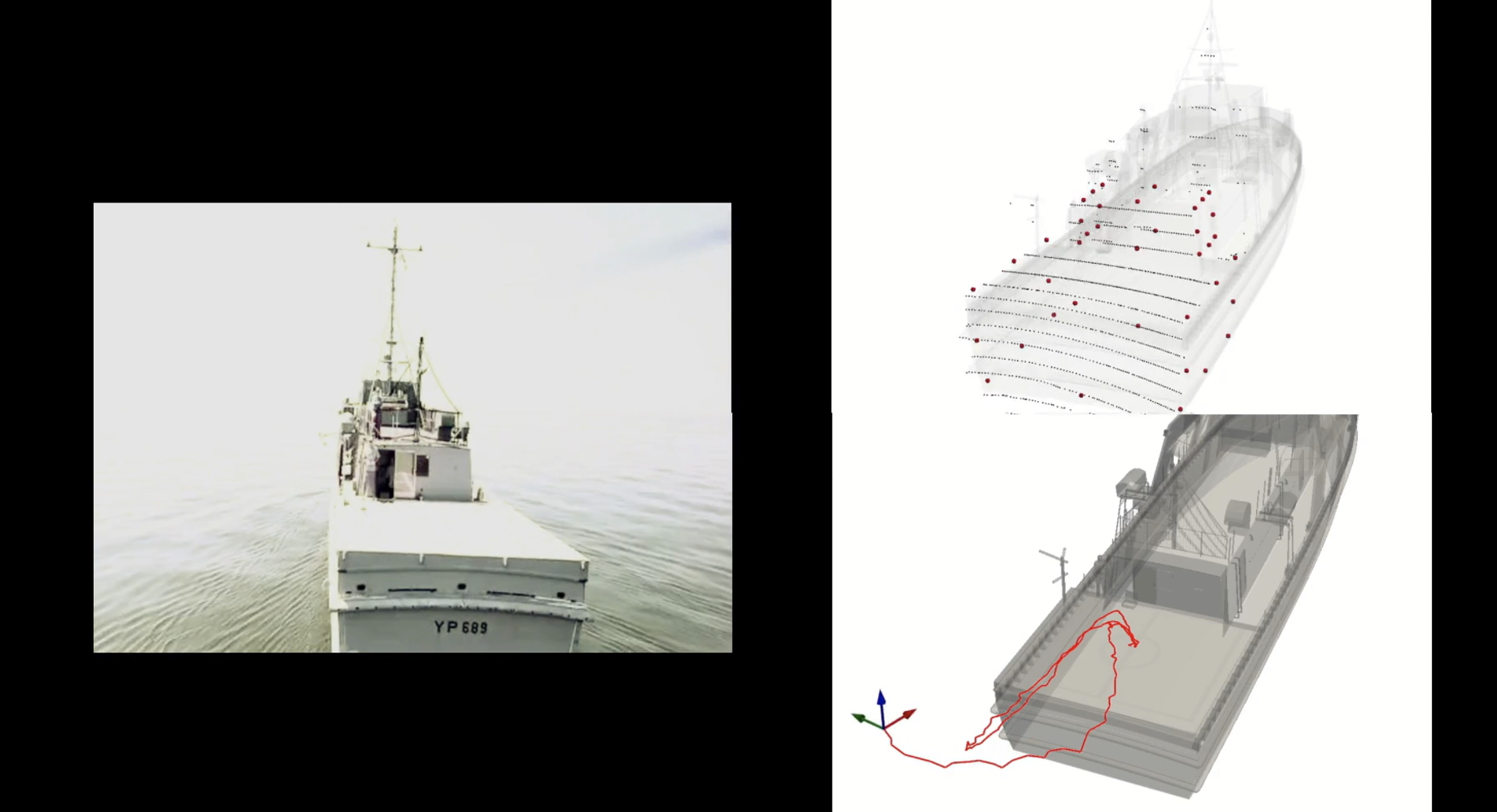

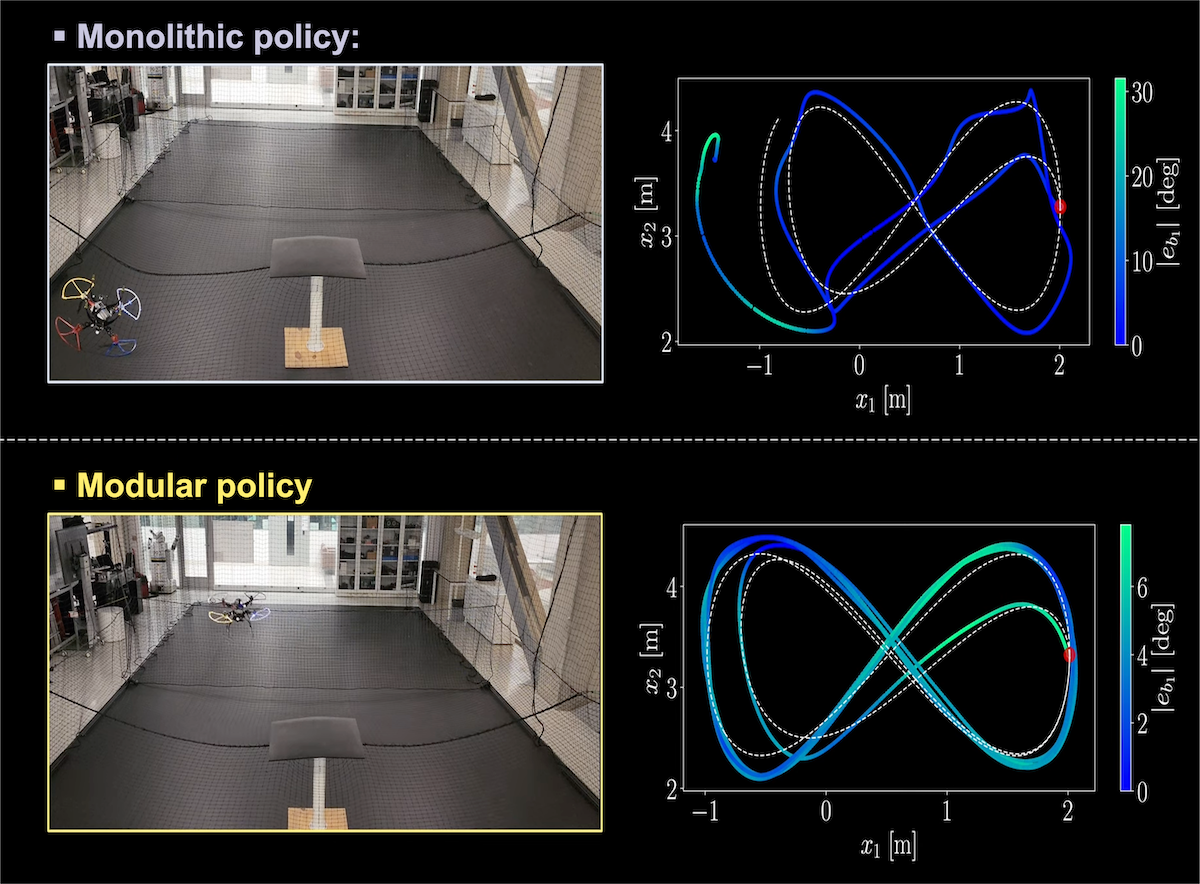

Finally, a vision-based control scheme was proposed to avoid estimating the state of the flapping wing aerial vehicle in real time. A deep neural pose estimator, based on a Siamese network, extracts robust features for better performance compared to traditional keypoint-based methods 3. Instead of training a monolithic network, a modular construction was proposed in which a pose estimation network and a control network are concatenated and trained alternately, so that end-to-end perception and control can be learned efficiently. To improve convergence when the estimator is combined with the controller, an alternating learning algorithm (ALICE) was presented that iteratively refines the individual neural networks so that the vehicle can ultimately be guided to perform given maneuvers.
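The alternating idea, refining one module while the other is frozen, can be sketched on a toy linear pipeline. This is a generic block-coordinate scheme under simplifying assumptions, not the ALICE algorithm itself. Both "networks" are plain matrices, each update is a closed-form least-squares solve, and the sensor map `H`, gain `K_true`, noise level, and loss are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 200

# Hypothetical data: states x, noisy observations y, and expert controls u_star.
H = rng.normal(size=(3, 2))                 # assumed sensor map, state -> observation
K_true = np.array([[1.0, 1.5]])             # assumed expert feedback gain
x = rng.normal(size=(N, 2))
y = x @ H.T + 0.01 * rng.normal(size=(N, 3))
u_star = -x @ K_true.T                      # shape (N, 1)


def total_loss(E, K):
    """Pose-estimation error plus control-imitation error of the concatenated modules."""
    x_hat = y @ E.T
    pose = np.mean(np.sum((x_hat - x) ** 2, axis=1))
    ctrl = np.mean(np.sum((-x_hat @ K.T - u_star) ** 2, axis=1))
    return pose + ctrl


E = 0.1 * rng.normal(size=(2, 3))           # estimator module: x_hat = E y
K = np.zeros((1, 2))                        # controller module: u = -K x_hat
losses = [total_loss(E, K)]

targets = np.hstack([x, u_star])            # joint target [x, u_star], shape (N, 3)
y_pinv = np.linalg.pinv(y)

for _ in range(20):
    # Controller step (estimator frozen): fit K so that -x_hat K^T matches u_star.
    x_hat = y @ E.T
    W, *_ = np.linalg.lstsq(x_hat, -u_star, rcond=None)
    K = W.T
    losses.append(total_loss(E, K))

    # Estimator step (controller frozen): minimize the joint loss over E.
    # x_hat @ M stacks [x_hat, -x_hat K^T]; the least-squares solution of y E^T M = targets
    # is E^T = pinv(y) @ targets @ pinv(M).
    M = np.hstack([np.eye(2), -K.T])
    E = (y_pinv @ targets @ np.linalg.pinv(M)).T
    losses.append(total_loss(E, K))

print("initial loss:", losses[0])
print("final loss:", losses[-1])
```

Because each step exactly minimizes the joint loss over one module, the loss cannot increase from one step to the next. With neural networks the exact solves would be replaced by a few gradient updates per module, and that guarantee would no longer hold automatically.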

This framework establishes the first nonlinear control system that stabilizes the coupled 6DOF longitudinal and lateral dynamics of FWUAVs without relying on the common assumptions of averaging or linearization.

Possible future directions:

- Including wing flexibility and fluid-structure interactions for improved modeling

- Developing learning-based aerodynamic models that balance accuracy with computational efficiency, further advancing FWUAV control systems

1 : Tejaswi, K.C., Kang, C.K. and Lee, T., 2021, May. Dynamics and Control of a Flapping Wing UAV with Abdomen Undulation Inspired by Monarch Butterfly. In 2021 American Control Conference (ACC) (pp. 66-71). IEEE.

2 : Tejaswi, K.C. and Lee, T., 2022. Constrained Imitation Learning for a Flapping Wing Unmanned Aerial Vehicle. IEEE Robotics and Automation Letters, 7(4), pp.10534-10541.

3 : Tejaswi, K.C., Lee, T. and Kang, C.K., 2024. Deep Neural Pose Estimation for a Flapping Wing Unmanned Aerial Vehicle with Visual-Inertial Sensor Fusion. In AIAA SCITECH 2024 Forum (p. 0948).
